This is the final post of a five-part series outlining my journey to getting SQL Server databases under source control and into a continuous integration environment.

The other posts are:

Day 1 - Powershell & Scripting Objects

Day 2 - Making Use of a Build Server

Day 3 - Database Developer Toolkits

Day 4 - Adding Value to Continuous Integration

Putting it all together

My journey is at an end and I finally have a working solution which has the following components:

1) A SQL Server dedicated to Continuous Integration

2) A build server/agent with TeamCity & FinalBuilder

3) Red Gate Developer Toolbelt installed on the build agent and the developer machine

4) SVN as my version control system, with databases under Red Gate SQL Source Control

5) Tests written with the NUnit framework

Below I have outlined the full process with a few supporting screenshots to help show how things work. This isn't intended as an explanation of all the technologies used, but simply how I have bolted the pieces together to serve my purpose. Further reading can be found by visiting the websites of the software vendors.

SQL Under Source Control

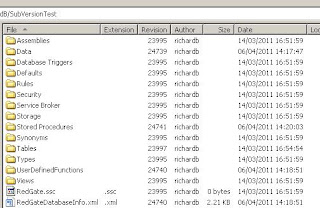

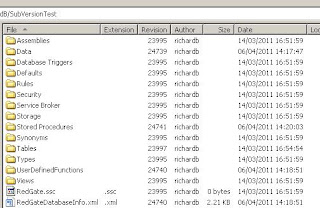

Here we have the database SubversionTest under source control using Red Gate's SQL Source Control. Nothing too surprising there, just a set of directories with scripts.

With the database linked to SVN, adding the object dbo.NewTable to the database gives a visual aid to let you know that changes need to be committed.

On browsing to the SQL Source Control tab, you get to view the DDL for the new object and, if the object has changed, the DDL that exists within SVN.

Here is the generic FinalBuilder script I use to deploy the database to the continuous integration server. It's very simple: it drops the database if it exists, creates a new one and then uses the Red Gate compare tools to deploy the database objects. I've wrapped these steps up in a batch file for ease of maintenance, but they don't do anything clever; they just use a combination of the switches that the compare executables support.
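To make the three steps concrete, here is a minimal sketch in Python rather than FinalBuilder or batch. It is not the script from the screenshot. The function names, the server name and the scripts folder are all hypothetical, and the SQL Compare switches shown (`/Scripts1`, `/Server2`, `/Database2`, `/Synchronize`) should be checked against the documentation for your version before you rely on them.

```python
from typing import List


def rebuild_database_sql(database: str) -> str:
    """T-SQL that drops the database if it exists, then creates it empty."""
    bracketed = "[" + database.replace("]", "]]") + "]"  # quote as an identifier
    literal = database.replace("'", "''")                 # quote as a string literal
    return (
        f"IF DB_ID(N'{literal}') IS NOT NULL DROP DATABASE {bracketed};\n"
        f"CREATE DATABASE {bracketed};"
    )


def sqlcmd_args(server: str, sql: str) -> List[str]:
    """Arguments to run one batch through sqlcmd with Windows authentication."""
    # -E: trusted connection, -b: return an error code if the batch fails
    return ["sqlcmd", "-S", server, "-E", "-b", "-Q", sql]


def sqlcompare_deploy_args(scripts_dir: str, server: str, database: str) -> List[str]:
    """Arguments asking SQL Compare to make the target match the scripts folder."""
    return [
        "sqlcompare.exe",
        f"/Scripts1:{scripts_dir}",   # source: the working copy checked out from SVN
        f"/Server2:{server}",         # target: the continuous integration instance
        f"/Database2:{database}",
        "/Synchronize",               # apply the differences to the target
    ]
```

On a build agent you could pass each argument list to `subprocess.run(args, check=True)`, first the `sqlcmd` call with the rebuild script and then the SQL Compare call, so that a failure in either step fails the build.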

When a "build" is run, it also runs some tests. Again, I won't delve into exactly which tests are being run; this just demonstrates the sort of screen you may see after your database has been deployed. These tests are run against the Continuous Integration server and are written using the NUnit framework, which TeamCity supports. In this instance we only have a couple of quick tests to sanity check the two objects, but on more complex apps these could take a significant amount of time.

Finally, we have the artifacts generated by the process, in the form of SQL scripts which will bring a production server (the one SQL Compare has been run against) up to the latest build we have in source control. There is also a supporting HTML file which is just a graphical representation of the changes that will be made by executing the SQL script.
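A comparison that only produces artifacts, without touching the target, might be sketched like this. Again this is Python building a command line, with hypothetical names and paths. The `/ScriptFile`, `/Report` and `/ReportType` switches are my assumption of the relevant SQL Compare options, and the accepted report type values differ between versions, so the value is passed straight through rather than guessed.

```python
from typing import List


def sqlcompare_artifact_args(
    scripts_dir: str,
    server: str,
    database: str,
    script_file: str,
    report_file: str,
    report_type: str,
) -> List[str]:
    """Arguments that compare source control with a server and write artifacts only."""
    return [
        "sqlcompare.exe",
        f"/Scripts1:{scripts_dir}",      # source: scripts folder from source control
        f"/Server2:{server}",            # target: the server to compare against
        f"/Database2:{database}",
        f"/ScriptFile:{script_file}",    # deployment script kept as a build artifact
        f"/Report:{report_file}",        # change report kept as a build artifact
        f"/ReportType:{report_type}",    # check the docs for values your version accepts
        # deliberately no /Synchronize: the target server is left unchanged
    ]
```

Leaving out `/Synchronize` is the important design choice here: the build produces a script someone can review and run later, rather than changing production itself.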

To ensure that the development is as bug free as possible, I've hooked the CI build to run whenever a database change is committed to SVN. This gives you confidence that the changes made have not caused any issues and, at the very least, haven't led to an invalid database build. You may also have a full application test run that is too slow to be suitable for "on check-in"; in that case it's possible to schedule some builds/tests to run during quiet time.

Taking it even further

If you ship a database-enabled product, one of the real benefits of a solution such as this is that you can quickly and easily target different versions/editions of SQL Server. It may be that a client runs a particular hotfix of SQL Server and wants to know whether this can be supported. If you can quickly provision an instance that mimics the client's, you can easily point your TeamCity projects at the new instance and see if your database builds and runs successfully.

I've talked before about using extended properties to version your database objects, and you can use this automated process to help with that. SVN assigns a revision number to every check-in, so you could easily set up a build step within TeamCity to run a T-SQL script that updates extended properties with this number. I haven't done this but will definitely be experimenting with it.
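As a rough idea of what that build step could run, here is an untested sketch in Python that generates the T-SQL. The function name and the property name `SvnRevision` are hypothetical, and I'm assuming the revision arrives as a string from a build parameter such as TeamCity's `build.vcs.number`. It stamps the database itself (class 0 in `sys.extended_properties`) rather than individual objects.

```python
def revision_property_sql(revision: str, property_name: str = "SvnRevision") -> str:
    """T-SQL that records an SVN revision as a database-level extended property."""
    number = int(revision)  # raises ValueError unless the revision is a whole number
    if number < 0:
        raise ValueError("revision must not be negative")
    name = property_name.replace("'", "''")  # quote as a string literal
    return (
        f"IF EXISTS (SELECT 1 FROM sys.extended_properties\n"
        f"           WHERE class = 0 AND name = N'{name}')\n"
        f"    EXEC sys.sp_updateextendedproperty @name = N'{name}', @value = N'{number}';\n"
        f"ELSE\n"
        f"    EXEC sys.sp_addextendedproperty @name = N'{name}', @value = N'{number}';"
    )
```

Converting the revision with `int()` before it goes anywhere near the SQL text means a malformed build parameter fails the step instead of ending up inside the script.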

The final word

Having worked with databases out of source control for some time, I'm really excited about how this is going to improve the quality of our database development going forward. The tools that Red Gate have provided are easy to use, and their simplicity is what's so impressive. I have hardly had to change my working practices at all and know that I'm already in a better place. Time will tell whether the versioning and targeting of different database editions takes off, but I know that it's not going to be a big challenge to implement.

I hope this has been useful to you and would be interested in hearing from people who may have implemented similar solutions or have any ideas on how to make this even better.

References

Red Gate SQL Source Control

Red Gate SQL Toolbelt

FinalBuilder

TeamCity

Microsoft Visual Studio

Microsoft SQL Server

PowerShell

NUnit